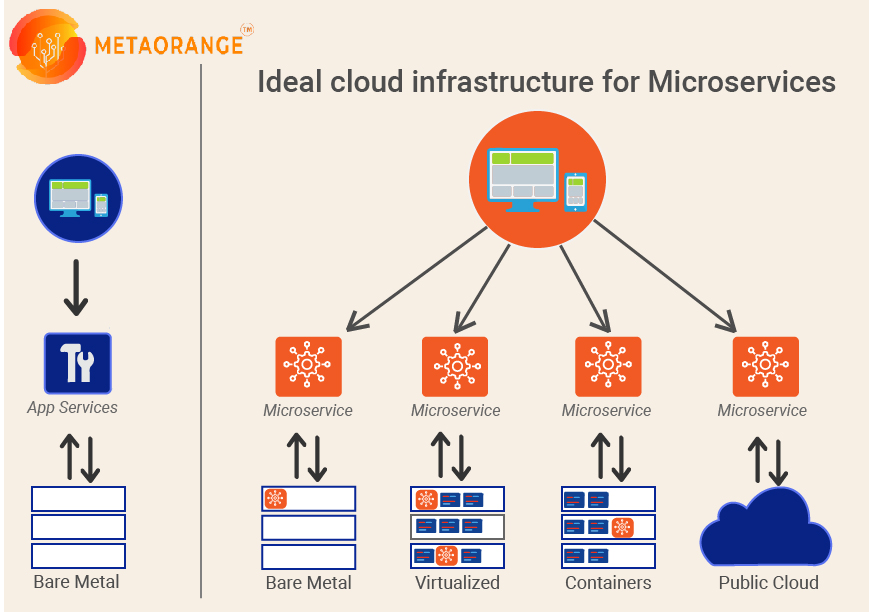

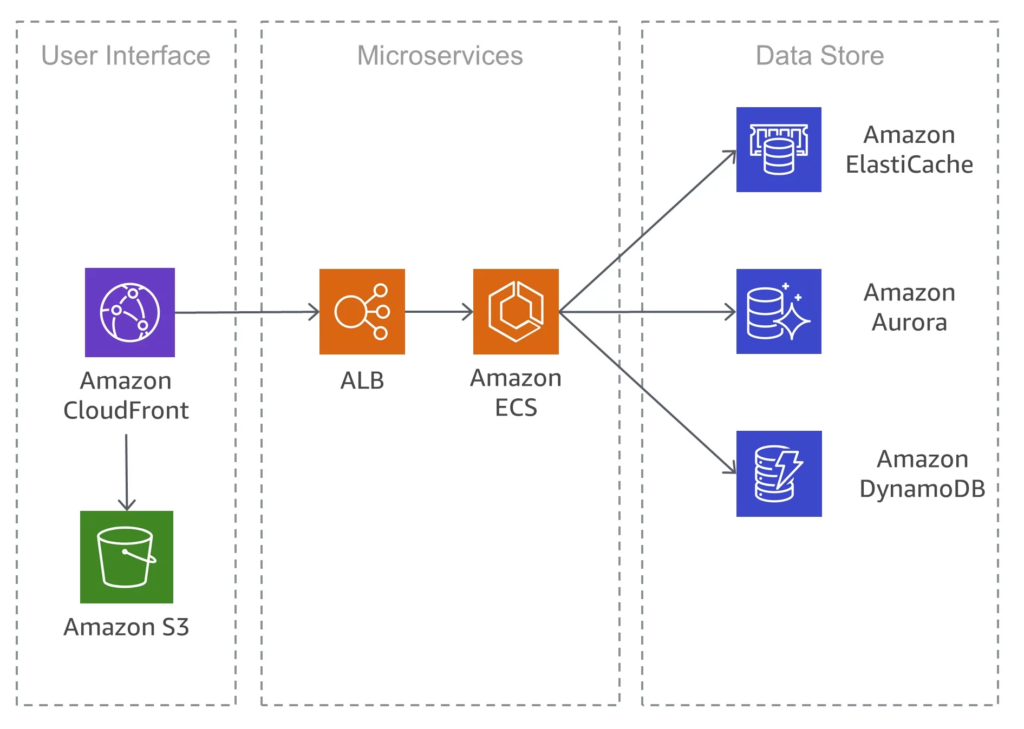

With the advent of cloud computing and the move from monolithic programs to an API-first approach and microservices, API attacks have become a critical concern in today’s digital world. As more firms provide API access to data and services, these interfaces become an appealing target for data theft and malware attacks. An API allows software programs to communicate with one another by defining how requests are made and processed.

APIs that are not secure pose a severe risk. They are frequently the most exposed component of a network and are vulnerable to denial-of-service (DoS) attacks. This is where API security comes in: it ensures that API requests are authenticated, authorized, validated, and sanitized, even under load. Here are the steps you can take to prevent API attacks.
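To make the four checks concrete, here is a minimal Python sketch of a request handler that authenticates, authorizes, validates, and sanitizes in that order. Everything in it (the `API_TOKENS` and `PERMISSIONS` tables, the `Request` type, the `note` field, and the length limit) is a hypothetical stand-in for illustration, not a reference to any particular product or framework.

```python
import hmac
import html
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical token store and permission table, for illustration only.
API_TOKENS = {"token-abc": "alice"}
PERMISSIONS = {"alice": {"read:orders"}}
MAX_NOTE_LENGTH = 200  # assumed limit


@dataclass
class Request:
    token: str
    action: str
    payload: dict


def authenticate(token: str) -> Optional[str]:
    """Return the caller's identity, or None if the token is unknown."""
    for known, user in API_TOKENS.items():
        # Constant-time comparison avoids leaking token contents via timing.
        if hmac.compare_digest(known.encode(), token.encode()):
            return user
    return None


def handle(request: Request) -> Tuple[int, str]:
    """Run the four checks in order and return (status code, body)."""
    user = authenticate(request.token)
    if user is None:
        return 401, "unauthenticated"
    # Authorize: the caller must hold the permission for this action.
    if request.action not in PERMISSIONS.get(user, set()):
        return 403, "forbidden"
    # Validate: the payload must have the expected type and size.
    note = request.payload.get("note")
    if not isinstance(note, str) or len(note) > MAX_NOTE_LENGTH:
        return 400, "invalid payload"
    # Sanitize: escape HTML metacharacters before the value is stored or echoed.
    return 200, html.escape(note)
```

In a real service these checks would usually live in a gateway or middleware layer, so that every endpoint gets them by default.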

Simple Steps to Prevent API Attacks

1. Evaluation of Potential API Dangers

A vital API security strategy is conducting a risk assessment on all of the APIs in your current registry. Take precautions to ensure they are secure and hardened against potential threats. To stay abreast of recent assaults and malware, check out the “API Security.

The goal of a risk assessment is to identify all systems and data that an API hack may affect, and then to define a treatment strategy and the controls needed to reduce those risks to an acceptable level.

Record when you conducted each review, and repeat the checks whenever the API changes or you discover new risks. Before making any further modifications to the code, double-check this documentation to ensure that you have taken all the necessary security and data handling measures.
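As a rough illustration of tracking reviews, the Python sketch below flags an API for re-assessment when it has changed since the last review, when a new risk has been reported, or when the review is simply old. The `RiskReview` type, its field names, and the 180-day interval are assumptions made for this example, not a recommended policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed policy for this sketch: re-review at least every 180 days.
REVIEW_INTERVAL = timedelta(days=180)


@dataclass
class RiskReview:
    api_name: str
    last_reviewed: date
    last_changed: date
    new_risk_reported: bool = False


def needs_review(review: RiskReview, today: date) -> bool:
    """True if the API changed, a new risk surfaced, or the review is stale."""
    changed_since_review = review.last_changed > review.last_reviewed
    stale = today - review.last_reviewed > REVIEW_INTERVAL
    return changed_since_review or review.new_risk_reported or stale


reviews = [
    RiskReview("orders", date(2024, 1, 10), date(2024, 3, 1)),    # changed later
    RiskReview("billing", date(2024, 3, 1), date(2024, 2, 1)),    # up to date
    RiskReview("legacy", date(2023, 1, 1), date(2022, 12, 1)),    # stale
]
due = [r.api_name for r in reviews if needs_review(r, date(2024, 4, 1))]
```

Running a check like this on every code change is one way to make sure the documentation gets looked at before the modification ships.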

2. Create a Database of APIs

What is not known cannot be protected. It is crucial to keep track of all APIs in a registry, recording details such as their names, functions, payloads, usage, access, active dates, retired dates, and owners. This way, you won’t have to deal with obscure APIs left over from a merger, acquisition, test, or deprecated version that nobody ever bothered to document. Recording the who, what, and when is vital for compliance and audit purposes and for forensic analysis following a security breach.
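A registry can start out very simply. The sketch below, in Python, models a registry entry with a subset of the fields listed above and compares the registry against the endpoints actually seen in traffic, to surface undocumented APIs and retired ones that are still being called. The `ApiRecord` type, the `audit` helper, and the sample names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable, List, Optional, Tuple


@dataclass
class ApiRecord:
    name: str
    function: str
    owner: str
    access: str                      # e.g. "public", "partner", "internal"
    active_from: date
    retired_on: Optional[date] = None


def audit(
    registry: Dict[str, ApiRecord], observed: Iterable[str], today: date
) -> Tuple[List[str], List[str]]:
    """Return (undocumented APIs, retired APIs that still receive calls)."""
    seen = set(observed)
    undocumented = sorted(seen - set(registry))
    retired_but_called = sorted(
        name
        for name in seen & set(registry)
        if registry[name].retired_on is not None
        and registry[name].retired_on <= today
    )
    return undocumented, retired_but_called


registry = {
    "orders-v2": ApiRecord("orders-v2", "order lookup", "team-a", "public",
                           date(2023, 1, 1)),
    "orders-v1": ApiRecord("orders-v1", "order lookup", "team-a", "public",
                           date(2021, 1, 1), retired_on=date(2023, 6, 30)),
}
undocumented, retired_but_called = audit(
    registry, ["orders-v2", "orders-v1", "test-export"], date(2024, 1, 1)
)
```

Here `test-export` shows up as undocumented and `orders-v1` as retired but still in use, which are exactly the cases a registry is meant to catch.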

If you want third-party developers to use your APIs in their own projects, you need to make sure they have access to thorough documentation. Document all technical API requirements, such as functions, classes, return types, arguments, and integration processes, in a document or manual linked to the registry.

3. API Runtime Security

Pay attention to API runtime security, which entails knowing what “normal” looks like in terms of the API’s network and communication. This allows you to detect anomalous traffic patterns, such as those caused by a DDoS attack against the API.

Knowing the types of APIs you use is crucial, since not all tools can monitor every API. For instance, if your tool only understands GraphQL but your APIs are also built with REST and gRPC, it may be overlooking roughly two-thirds of the traffic. A tool that uses machine learning or artificial intelligence to detect anomalies might be helpful for runtime security.

When a runtime security system continuously learns what normal traffic looks like, it can establish thresholds for aberrant traffic. When it then detects abnormal requests from an external IP address, it can take steps to shut off public access to that API.
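One very simple way to "learn" a threshold is to take past request rates and flag anything far above their average. The Python sketch below uses the mean plus three standard deviations, which is a common rule of thumb and an assumption of this example; real runtime security tools typically use richer models. The function names and sample numbers are hypothetical.

```python
import statistics
from typing import Sequence


def learn_threshold(requests_per_minute: Sequence[float], k: float = 3.0) -> float:
    """Derive an anomaly threshold as mean + k standard deviations."""
    mean = statistics.fmean(requests_per_minute)
    spread = statistics.pstdev(requests_per_minute)
    return mean + k * spread


def is_anomalous(rate: float, threshold: float) -> bool:
    """A rate is treated as abnormal once it exceeds the learned threshold."""
    return rate > threshold


# Hypothetical history of requests per minute during normal operation.
baseline = [90, 110, 100, 100]
threshold = learn_threshold(baseline)
```

With this baseline the threshold lands a little above 121 requests per minute, so a burst of 500 would be flagged while 105 would not. Re-running `learn_threshold` on a rolling window is one way to keep the baseline current.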

The system should send out notifications once abnormal traffic thresholds are reached. These notifications trigger a manual, semi-automated, or fully automatic response. DevOps should also be able to restrict, geo-fence, and outright block any traffic from the outside.
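The escalation described here can be sketched as a per-IP sliding-window counter with two thresholds: crossing the first raises an alert for a human to look at, and crossing the second blocks the address automatically. The `TrafficMonitor` class, its thresholds, and the 60-second window below are all assumptions for illustration, written in Python.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Set


class TrafficMonitor:
    """Counts requests per IP in a sliding window and escalates the response."""

    def __init__(self, alert_threshold: int, block_threshold: int,
                 window_seconds: float = 60.0) -> None:
        self.alert_threshold = alert_threshold
        self.block_threshold = block_threshold
        self.window_seconds = window_seconds
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)
        self.blocked: Set[str] = set()

    def record(self, ip: str, now: float) -> str:
        """Register one request and return "allow", "alert", or "block"."""
        if ip in self.blocked:
            return "block"
        hits = self._hits[ip]
        hits.append(now)
        # Drop requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) > self.block_threshold:
            self.blocked.add(ip)  # automatic action
            return "block"
        if len(hits) > self.alert_threshold:
            return "alert"        # notify a human or a semi-automated workflow
        return "allow"


monitor = TrafficMonitor(alert_threshold=3, block_threshold=5)
results = [monitor.record("203.0.113.7", float(t)) for t in range(7)]
```

In this run the first three requests are allowed, the next two raise alerts, and from the sixth request on the address is blocked. Geo-fencing would follow the same pattern, with the decision keyed on the caller's region instead of the request count.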

Wrapping Up!

Through APIs, enterprises can improve and deliver services, engage consumers, increase efficiency, and grow revenue, but only if they implement them safely. These steps will help you secure your APIs and prevent attacks. You can also seek professional help from Metaorange in implementing these steps. Its team can help companies secure their APIs like a pro.