When it comes to building applications, microservices have become the go-to architecture in today’s market. Despite their reputation for solving many problems, even talented professionals can run into issues when using them. Engineers can study the recurring patterns behind these problems and reuse proven solutions to improve an application’s performance. In this article on microservices design patterns, I discuss why design patterns are necessary and how anti-patterns creep in when microservices are treated as magic pixie dust.

Let’s start by understanding microservices and their design patterns a bit better.

Microservices are small, self-contained services spread across a business. Each one is autonomous and does only one thing, and an application is composed from many of them.

Microservices can have a big impact, but building them well requires an understanding of microservice architecture (MSA) and a few microservices design patterns.

A pattern typically depicts a set of elements and how they link and interact. Effective development and design paradigms reduce development time, and design patterns in particular help solve common software design issues. In programming, a design pattern is a general solution to a recurring problem: it expresses an idea rather than a specific procedure. Using design patterns can also make your code more reusable.

Design patterns have several uses in microservices. In particular, they help you find solutions to design issues. They help you:

- Find appropriate objects: patterns help you discover less obvious abstractions and the objects that capture them.

- Choose an appropriate granularity for your objects: patterns can assist with the compositional process.

- Define object interfaces: patterns help harden your interfaces and clarify what is and isn’t included.

- Specify object implementations: patterns describe implementations and the ramifications of various approaches, which helps you understand them.

- Promote reusability: you don’t have to work out from scratch which strategy is most successful.

- Support extensibility: modification and adaptability are built in.

Problems with Design Patterns

Patterns aren’t a cure-all, though. They can bring problems of their own:

- Over-engineering

- Time-consuming, inconvenient implementation

- Code that is intricate to maintain

Then there are anti-patterns: solutions that “seemed like a good idea at the time” but turn out to be like driving a screw into the wrong place.

Like design patterns, anti-patterns define an industry vocabulary, in this case for the common but flawed processes and implementations found throughout enterprises. This higher-level language facilitates communication among software developers and allows abstract concepts to be explained concisely.

Microservices anti-patterns are typically described in terms of:

- Cause: what’s the reason for all of this?

- Symptoms: what made us realize there was a problem?

- Consequences: what is the impending doom?

- Solution: a strategy for resolving the issue.

Frequently Seen Anti-patterns

- Functional decomposition: the work of a programmer whose mindset is still firmly fixed on procedural programming. It shows up as:

  - Classes that are really just functions.

  - Excessive decomposition.

- Stuck in procedural thinking, a programmer may instead produce:

  - A single class encompassing all of the requirements.

  - Insufficient decomposition.

Let’s take a look at some well-known design patterns that can help you design and implement microservices:

Ambassador can be used to offload common client connectivity tasks such as monitoring and logging. It can also handle routing and security (such as TLS). Ambassador services are often deployed as a sidecar.
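To make the Ambassador pattern concrete, here is a minimal in-process sketch of the responsibilities an ambassador takes over from the client: logging and retrying a remote call. In a real deployment the ambassador runs as a separate proxy process or sidecar; the `Ambassador` class, its `retries` parameter, and `flaky_inventory_call` are hypothetical names used only for illustration.

```python
import logging
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
logger = logging.getLogger("ambassador")


class Ambassador:
    """Hypothetical helper that wraps outbound calls with logging and retries.

    A real ambassador runs out of process (for example, as a sidecar proxy);
    this in-process version only illustrates the work it takes on.
    """

    def __init__(self, retries: int = 3) -> None:
        self.retries = retries

    def call(self, name: str, fn: Callable[[], T]) -> T:
        last_error: Optional[Exception] = None
        for attempt in range(1, self.retries + 1):
            try:
                result = fn()
                logger.info("%s succeeded on attempt %d", name, attempt)
                return result
            except ConnectionError as exc:
                # Only transient network errors are retried.
                last_error = exc
                logger.warning("%s failed on attempt %d: %s", name, attempt, exc)
        raise RuntimeError(f"{name} failed after {self.retries} attempts") from last_error


attempts = {"count": 0}


def flaky_inventory_call() -> str:
    # Hypothetical remote call that fails twice before succeeding.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("connection reset")
    return "in stock"


print(Ambassador(retries=3).call("inventory", flaky_inventory_call))  # in stock
```

The client code only calls `call`; how many times to retry and what to log is decided in one place, which is the point of moving it into an ambassador.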

Anti-corruption layer implements a façade between new and legacy applications, ensuring that the design of a new application is not constrained by the requirements of legacy systems.
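A minimal sketch of an anti-corruption layer as a translation function, so the new service never handles the legacy record format directly. The legacy field names (`CUST_ID`, `CUST_NM`, `STAT_CD`), the status codes, and the `Customer` model are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Domain model used by the new service."""
    customer_id: int
    name: str
    active: bool


# Hypothetical legacy status codes that mean "active" in the new model.
_LEGACY_ACTIVE_CODES = {"A", "ACT"}


def from_legacy(record: dict) -> Customer:
    """Translate a legacy record so legacy conventions stay out of the new design."""
    return Customer(
        customer_id=int(record["CUST_ID"]),
        name=record["CUST_NM"].strip().title(),
        active=record["STAT_CD"].strip().upper() in _LEGACY_ACTIVE_CODES,
    )


legacy_row = {"CUST_ID": "0042", "CUST_NM": "  ADA LOVELACE ", "STAT_CD": "act"}
print(from_legacy(legacy_row))
# Customer(customer_id=42, name='Ada Lovelace', active=True)
```

If the legacy format changes, only `from_legacy` has to change; the new service keeps working with `Customer`.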

Backends for Frontends creates separate backend services for different types of clients, such as desktop and mobile. That way, a single backend service doesn’t need to handle the conflicting requirements of various client types. This pattern helps keep each microservice simple by separating client-specific concerns.
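As a sketch, assume one hypothetical order-service response that two backends for frontends shape differently: the mobile backend returns a compact summary, while the desktop backend returns the full details. The field names and data are illustrative only.

```python
from typing import Any

# Hypothetical data returned by a downstream order service.
ORDER = {
    "id": 17,
    "status": "shipped",
    "items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}],
    "shipping_address": "1 Example Street",
    "audit_log": ["created", "paid", "shipped"],
}


def mobile_bff(order: dict[str, Any]) -> dict[str, Any]:
    """Small screens and slow networks: send only a summary."""
    return {
        "id": order["id"],
        "status": order["status"],
        "item_count": sum(item["qty"] for item in order["items"]),
    }


def desktop_bff(order: dict[str, Any]) -> dict[str, Any]:
    """Desktop clients show the full order, minus internal audit data."""
    return {key: value for key, value in order.items() if key != "audit_log"}


print(mobile_bff(ORDER))  # {'id': 17, 'status': 'shipped', 'item_count': 3}
```

Each backend can evolve with its own client without forcing compromises on the other.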

Bulkhead isolates critical resources, such as the connection pool, memory, and CPU, for each workload or service. Bulkheads stop a single workload (or service) from consuming all of the resources and starving the rest. This pattern increases the resiliency of the system by preventing cascading failures caused by one service.
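A minimal bulkhead sketch, assuming each downstream dependency gets its own fixed number of concurrent slots via `threading.BoundedSemaphore`. When a dependency’s slots are exhausted, new calls are rejected immediately instead of tying up threads that other dependencies need. The `Bulkhead` class and the pool sizes are hypothetical.

```python
import threading
from typing import Callable, TypeVar

T = TypeVar("T")


class BulkheadFullError(RuntimeError):
    pass


class Bulkhead:
    """Caps concurrent calls to one dependency so it cannot starve the others."""

    def __init__(self, name: str, max_concurrent: int) -> None:
        self.name = name
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def run(self, fn: Callable[[], T]) -> T:
        # Fail fast rather than queue when this compartment is full.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError(f"{self.name} bulkhead is full")
        try:
            return fn()
        finally:
            self._slots.release()


# One compartment per dependency: a slow payments service can occupy at most
# 2 concurrent slots, leaving the search compartment untouched.
payments = Bulkhead("payments", max_concurrent=2)
search = Bulkhead("search", max_concurrent=5)
```

Failing fast is one design choice; a variant could wait with a timeout before rejecting.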

Gateway Aggregation aggregates requests to multiple individual microservices into a single request, reducing chattiness between consumers and services.
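A sketch of gateway aggregation with `asyncio.gather`: the gateway fans one client request out to several services concurrently and returns a single combined response. The three service coroutines are hypothetical stand-ins for network calls.

```python
import asyncio
from typing import Any


# Hypothetical downstream services; real ones would make HTTP or gRPC calls.
async def get_profile(user_id: int) -> dict[str, Any]:
    await asyncio.sleep(0.01)
    return {"name": "Ada"}


async def get_orders(user_id: int) -> list[int]:
    await asyncio.sleep(0.01)
    return [101, 102]


async def get_recommendations(user_id: int) -> list[str]:
    await asyncio.sleep(0.01)
    return ["book"]


async def dashboard(user_id: int) -> dict[str, Any]:
    """One client request in, one aggregated response out."""
    profile, orders, recs = await asyncio.gather(
        get_profile(user_id), get_orders(user_id), get_recommendations(user_id)
    )
    return {"profile": profile, "orders": orders, "recommendations": recs}


print(asyncio.run(dashboard(1)))
# {'profile': {'name': 'Ada'}, 'orders': [101, 102], 'recommendations': ['book']}
```

The client makes one round trip instead of three, and the calls run concurrently inside the gateway.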

Gateway Offloading enables each microservice to offload shared service functionality, such as the use of SSL certificates, to an API gateway.

Gateway Routing routes requests to multiple microservices through a single endpoint, so that consumers don’t need to manage many separate endpoints.
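A minimal gateway-routing sketch: a prefix table maps paths on one public endpoint to internal services, using longest-prefix matching. The internal hostnames and the table itself are hypothetical.

```python
from typing import Optional

# Hypothetical routing table: public path prefix -> internal service URL.
GATEWAY_ROUTES = {
    "/orders": "http://orders-service:8080",
    "/orders/returns": "http://returns-service:8080",
    "/users": "http://users-service:8080",
}


def route(path: str) -> Optional[str]:
    """Return the upstream URL for a request path, or None if nothing matches."""
    matches = [
        prefix for prefix in GATEWAY_ROUTES
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return None
    # Longest prefix wins, so /orders/returns beats /orders.
    best = max(matches, key=len)
    return GATEWAY_ROUTES[best] + path


print(route("/orders/returns/9"))  # http://returns-service:8080/orders/returns/9
```

Clients only know the gateway’s address; moving a service means updating one table entry.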

Sidecar deploys helper components of an application as a separate container or process to provide isolation and encapsulation.

Strangler Fig supports incremental refactoring of an application by gradually replacing specific pieces of functionality with new services.
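A sketch of the strangler fig facade: requests for features that have already been migrated go to new services, and everything else still goes to the legacy application. The feature names, URLs, and the `MIGRATED` set are hypothetical; in practice the set grows one feature at a time until the legacy system can be retired.

```python
# Features already carved out of the monolith (hypothetical names).
MIGRATED = {"invoices", "notifications"}

LEGACY_URL = "http://legacy-app:8000"


def strangler_facade(feature: str) -> str:
    """Decide where a request for `feature` should be sent."""
    if feature in MIGRATED:
        return f"http://{feature}-service:8080"
    return LEGACY_URL


def migrate(feature: str) -> None:
    """Once a new service is ready, switch its traffic away from the monolith."""
    MIGRATED.add(feature)


print(strangler_facade("invoices"))  # http://invoices-service:8080
print(strangler_facade("reports"))   # http://legacy-app:8000
migrate("reports")
print(strangler_facade("reports"))   # http://reports-service:8080
```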

Design patterns are almost always the result of a conscious choice. When we apply a pattern, we’re deliberately deciding to make life easier for ourselves.

However, not every pattern is beneficial.

Engineers and business leaders should be wary of anti-patterns, because they can lead to further problems.

Let’s explore anti-patterns in microservices in more depth.

Like patterns, anti-patterns are recognizable and repeatable. Unlike patterns, though, they are unintentional, and you only become aware of them once their consequences become apparent. In pursuit of faster delivery, under tight deadlines and similar pressures, people in your business often make well-intentioned (if misguided) decisions.

Anti-patterns are a significant roadblock for enterprises trying to switch to a microservices architecture. I’ve noticed some prevalent anti-patterns in firms making that conversion. Ultimately, these decisions jeopardized their progress and exacerbated the very issues they were trying to solve.

An anti-pattern differs from a regular pattern in that it has three components:

- Problem: in microservice adoption, the problem is typically how to improve the frequency, speed, and reliability of software delivery.

- Anti-pattern solution: a solution that doesn’t work well.

- Refactored solution: a more practical answer to the problem.

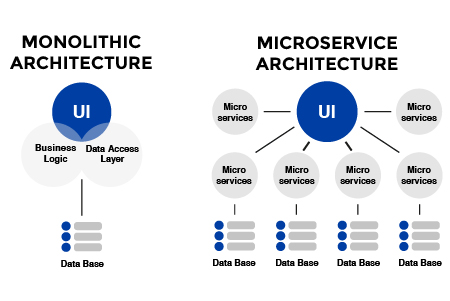

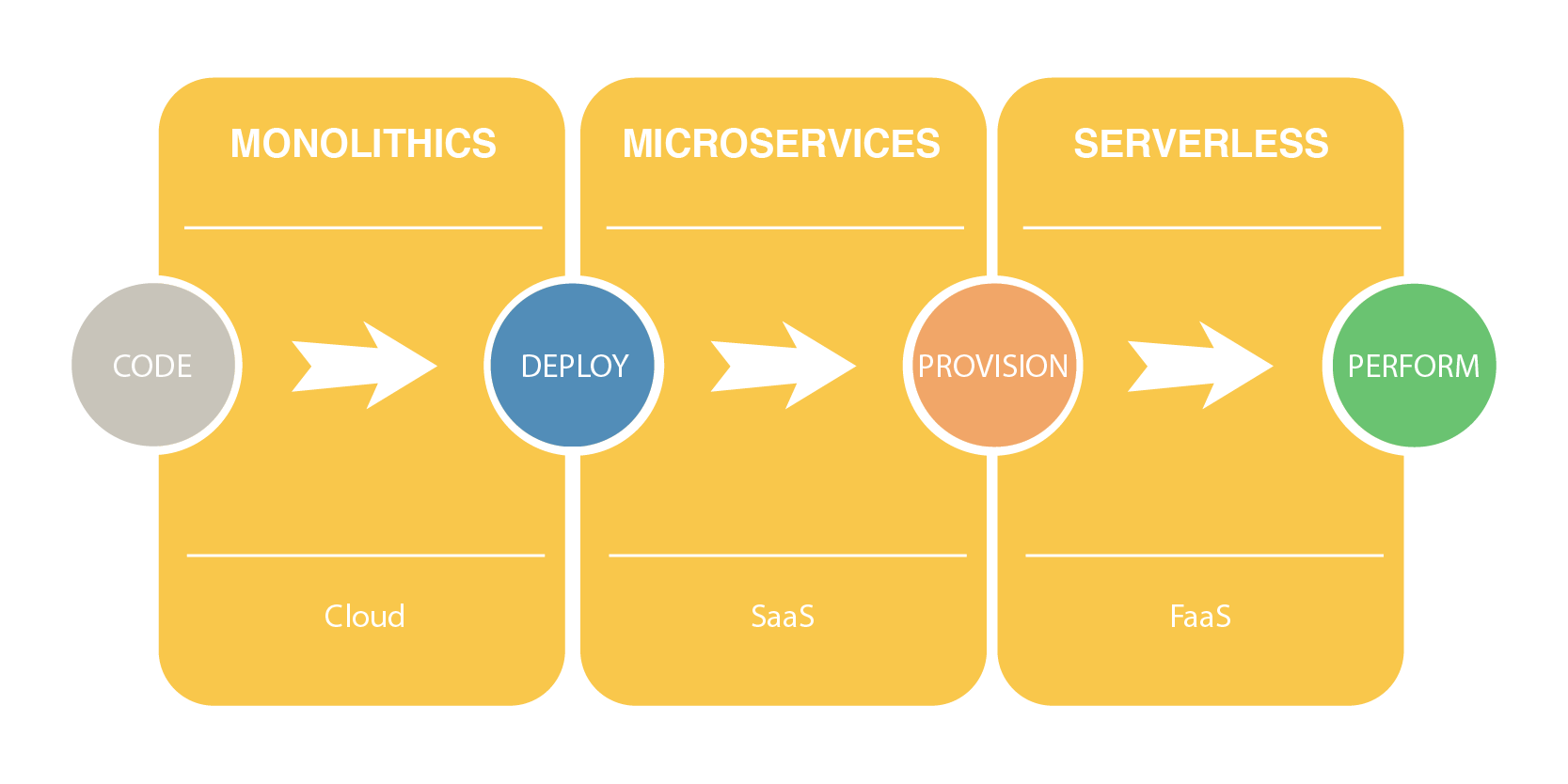

Monolithic software has been in use since the early days of computing. Instead of doing only one thing, these programs do everything, and developers have access to the entire source code.

Code and common dependencies are kept together. In a nutshell, this offers:

Uniformity: engineers use the same set of tools to interact with the code, for example to review, build, and test it.

Awareness: all team members share the code, so the rest of the team’s work is visible.

Completeness: the entire project can be built from a single repository.

Centralization: all of the code lives in one repository.

Google, for example, still keeps the vast majority of its code in a single repository. The issue with monolithic programs is that everyone works on the same code and database, so small changes can have big effects. Re-deploying can take hours, and the code isn’t always easy for newcomers to understand. As a result, monolithic apps tend to be expensive to change, slow to deploy, and difficult to understand. Various principles have been developed to improve design and architecture; microservices and service-oriented architecture (SOA) are among the newest.

Today, changes in process, strategy, and organizational structure are as important as changes in technology. There are answers to migration concerns, but they only work in particular settings. Reusing software yields mixed results, and a failure to reuse gives rise to several unfavourable patterns.

Here are some of the well-known anti-patterns of microservices.

Micro Everything

Micro Everything is one of the most common anti-patterns in business. The application is split into microservices, but all of them share one big data store. The critical problem with this anti-pattern is tracking the data: it becomes hard to tell which service owns or changes what.

Break the Piggy Bank

Another prevalent anti-pattern, Break the Piggy Bank, occurs when refactoring an existing application into microservices. The refactoring is risky and can take hours or days.

Agile-Fall

This anti-pattern appears when a team changes from waterfall to agile software development. Instead of truly adopting agile, the team starts with a rudimentary hybrid, “agile-fall”, which combines pieces of both processes and tends to get worse over time.

We also propose a methodology for recovering the structure of a microservice-based project, along with two metrics based on network closeness and betweenness centrality.
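Closeness and betweenness can be illustrated on a small service call graph. This is a generic sketch of the standard graph metrics, not necessarily the exact metrics of the methodology mentioned: closeness is computed here from each service’s outgoing shortest-path distances, and betweenness uses Brandes’ algorithm for unweighted directed graphs. The service names are hypothetical.

```python
from collections import deque

# Hypothetical call graph: service -> services it calls.
SERVICE_GRAPH = {
    "gateway": ["orders"],
    "orders": ["payments"],
    "payments": ["ledger"],
    "ledger": [],
}


def bfs_distances(graph: dict[str, list[str]], source: str) -> dict[str, int]:
    """Hop counts from `source` to every service it can reach."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in graph[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist


def closeness(graph: dict[str, list[str]], node: str) -> float:
    """Reachable services divided by total distance to them; 0.0 if none."""
    dist = bfs_distances(graph, node)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total else 0.0


def betweenness(graph: dict[str, list[str]]) -> dict[str, float]:
    """Brandes' algorithm: how many shortest paths pass through each service."""
    score = {v: 0.0 for v in graph}
    for s in graph:
        stack: list[str] = []
        preds: dict[str, list[str]] = {v: [] for v in graph}
        sigma = {v: 0 for v in graph}
        sigma[s] = 1
        dist = {v: -1 for v in graph}
        dist[s] = 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies in order of decreasing distance from s.
        delta = {v: 0.0 for v in graph}
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    return score


print({s: round(closeness(SERVICE_GRAPH, s), 2) for s in SERVICE_GRAPH})
# {'gateway': 0.5, 'orders': 0.67, 'payments': 1.0, 'ledger': 0.0}
print(betweenness(SERVICE_GRAPH))
# {'gateway': 0.0, 'orders': 2.0, 'payments': 2.0, 'ledger': 0.0}
```

A service with high betweenness sits on many call paths, which makes it a natural candidate for closer review.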

Here are a few more anti-patterns:

Ambiguous Service

An operation’s name may be too long, or a message’s name may be too generic and vague. In some cases, such names can be caught by limiting name length and restricting vague terms.
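A minimal sketch of such a check, assuming a hypothetical maximum name length and a hypothetical list of vague words; a real detector would tune both to its own naming conventions.

```python
import re

MAX_NAME_LENGTH = 30  # hypothetical limit
VAGUE_WORDS = {"data", "info", "process", "handle", "manage", "do", "message"}  # hypothetical


def split_words(name: str) -> list[str]:
    """Split camelCase or snake_case identifiers into lowercase words."""
    spaced = re.sub(r"([a-z0-9])([A-Z])", r"\1 \2", name).replace("_", " ")
    return [word.lower() for word in spaced.split()]


def naming_problems(name: str) -> list[str]:
    """Flag operation names that are too long or made only of vague words."""
    problems = []
    if len(name) > MAX_NAME_LENGTH:
        problems.append("too long")
    words = split_words(name)
    if words and all(word in VAGUE_WORDS for word in words):
        problems.append("too vague")
    return problems


for op in ["processData", "getOrderById", "retrieveAllCustomerOrdersAndInvoicesForReporting"]:
    print(op, naming_problems(op))
```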

No API Versioning

A service may call an external API without a clear version, or have the version changed ad hoc in its code. Delays in data processing can then lead to resource problems later. This is why APIs need semantically consistent version descriptions. Unversioned or badly named APIs are difficult to discover; the proposed solution is simple and can be improved in the future.
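As a sketch of how unversioned APIs might be flagged, the check below tests whether each route path starts with a `/v<number>` segment. The route list and the URL-path versioning convention are assumptions; some teams version through headers or media types instead.

```python
import re

VERSION_SEGMENT = re.compile(r"^/v\d+(/|$)")

# Hypothetical routes exposed by a service.
SERVICE_ROUTES = ["/v1/orders", "/v2/orders", "/orders/export", "/v1/users/{id}"]


def unversioned(routes: list[str]) -> list[str]:
    """Return routes whose path does not start with a /v<number> segment."""
    return [path for path in routes if not VERSION_SEGMENT.match(path)]


print(unversioned(SERVICE_ROUTES))  # ['/orders/export']
```

Keeping `/v1` and `/v2` side by side lets existing clients continue working while new clients move to the changed API.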

Hard-Coded Endpoints

Some services have hard-coded IP addresses and ports, which causes similar problems. To replace an IP address, for example, you must manually edit files one by one. The current detection method only recognizes hard-coded IP addresses, without their context.
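A minimal sketch of the usual fix: read each dependency’s address from configuration (here, environment variables) instead of hard-coding it, so moving a service means changing configuration rather than editing source files one by one. The variable name `INVENTORY_SERVICE_URL` and the default value are hypothetical; service discovery or DNS names are common alternatives. The second function is a naive detector that, like the method described, finds IP:port literals without any context.

```python
import os
import re

# Anti-pattern, shown only for contrast: the address is baked into the code.
HARD_CODED_URL = "http://10.0.3.17:8081/inventory"


def inventory_url() -> str:
    """Preferred: resolve the address from configuration at runtime."""
    return os.environ.get("INVENTORY_SERVICE_URL", "http://inventory:8081")


IP_PORT = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}:\d{1,5}\b")


def find_hard_coded_endpoints(source: str) -> list[str]:
    """Naive detector: report IP:port literals, ignoring their context."""
    return IP_PORT.findall(source)


print(find_hard_coded_endpoints('requests.get("http://10.0.3.17:8081/inventory")'))
# ['10.0.3.17:8081']
```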

Bottleneck Service

A service that is used by many clients but is a single point of failure. Because so many other clients and services use it, coupling is high. As the number of external clients grows, its response time increases, and due to the extra traffic several services may be starved of capacity.
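One simple way to spot candidate bottleneck services is to count how many distinct services call each one (its fan-in). The call graph and the threshold below are hypothetical; a real analysis would also look at traffic volume and response times.

```python
from collections import Counter

# Hypothetical call graph: caller -> services it calls.
CALLS = {
    "web": ["auth", "catalog", "orders"],
    "mobile": ["auth", "catalog"],
    "orders": ["auth", "payments"],
    "payments": ["auth"],
    "catalog": [],
    "auth": [],
}

FAN_IN_THRESHOLD = 3  # hypothetical


def bottlenecks(calls: dict[str, list[str]], threshold: int) -> dict[str, int]:
    """Services called by at least `threshold` distinct callers."""
    fan_in = Counter(callee for callees in calls.values() for callee in set(callees))
    return {svc: n for svc, n in fan_in.items() if n >= threshold}


print(bottlenecks(CALLS, FAN_IN_THRESHOLD))  # {'auth': 4}
```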

Overinflated Service

A service with a large interface and many data type parameters, whose operations have differing levels of cohesion. Such a service is less reusable, testable, and maintainable. The suggested detection method validates each class and parameter against service standards.
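A rough sketch of measuring interface size with Python’s `inspect` module: count a service class’s public operations and their parameters, and flag it above some limits. The `OrderService` class and both thresholds are hypothetical, and the detection method mentioned may use different measures.

```python
import inspect

MAX_OPERATIONS = 5   # hypothetical limit
MAX_PARAMETERS = 4   # hypothetical limit per operation


class OrderService:
    """Hypothetical service that has accumulated too many responsibilities."""

    def create(self, customer_id, items, address, coupon, gift_wrap, notes): ...
    def cancel(self, order_id): ...
    def refund(self, order_id, amount): ...
    def invoice(self, order_id): ...
    def ship(self, order_id, carrier): ...
    def recommend(self, customer_id): ...


def interface_report(cls: type) -> dict[str, object]:
    """Count public operations and flag those with too many parameters."""
    ops = {
        name: len(inspect.signature(fn).parameters) - 1  # ignore `self`
        for name, fn in inspect.getmembers(cls, inspect.isfunction)
        if not name.startswith("_")
    }
    return {
        "operations": len(ops),
        "too_many_operations": len(ops) > MAX_OPERATIONS,
        "wide_operations": sorted(n for n, p in ops.items() if p > MAX_PARAMETERS),
    }


print(interface_report(OrderService))
# {'operations': 6, 'too_many_operations': True, 'wide_operations': ['create']}
```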

Service Chain

Also called a message chain. It is a chain of services that together fulfil a common role, and it appears when a client calls many services in succession, each one depending on the next.
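A sketch of spotting long service chains: compute the longest path of successive calls from an entry point and flag it when it exceeds a limit. The call graph and the limit are hypothetical, and the recursion assumes the call graph has no cycles.

```python
from functools import lru_cache

# Hypothetical synchronous call graph (must be acyclic for this sketch).
CALL_CHAIN_GRAPH = {
    "checkout": ("cart",),
    "cart": ("pricing",),
    "pricing": ("discounts",),
    "discounts": ("loyalty",),
    "loyalty": (),
}

MAX_CHAIN = 3  # hypothetical: more hops than this is flagged


@lru_cache(maxsize=None)
def longest_chain(service: str) -> tuple[str, ...]:
    """Longest path of successive calls starting at `service`."""
    best: tuple[str, ...] = ()
    for callee in CALL_CHAIN_GRAPH[service]:
        candidate = longest_chain(callee)
        if len(candidate) > len(best):
            best = candidate
    return (service,) + best


chain = longest_chain("checkout")
hops = len(chain) - 1
print(" -> ".join(chain), "| hops:", hops, "| flagged:", hops > MAX_CHAIN)
# checkout -> cart -> pricing -> discounts -> loyalty | hops: 4 | flagged: True
```

Every hop adds latency and another chance of failure, which is why long synchronous chains are worth flagging.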

Stovepipe Service

Some functions, such as utility and infrastructure code, are repeated across services. Rather than focusing on their primary purpose, the services in this anti-pattern each implement their own utility, infrastructure, and business operations.

Knots

This anti-pattern consists of a set of low-cohesion services that are tightly coupled to one another, which constrains their reusability. Because of this complicated infrastructure, such services tend to have low availability and high response times.
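One heuristic for spotting a knot, offered as an assumption rather than an established detection rule, is to look for groups of services that can all reach each other through their calls (strongly connected components of the call graph). The sketch uses plain reachability, which is fine for a handful of services; the service names are hypothetical.

```python
# Hypothetical call graph in which several services depend on one another.
KNOT_GRAPH = {
    "orders": ["inventory"],
    "inventory": ["pricing"],
    "pricing": ["orders", "catalog"],
    "catalog": [],
    "email": ["orders"],
}


def reachable(graph: dict[str, list[str]], start: str) -> set[str]:
    """All services reachable from `start` by following calls."""
    seen: set[str] = set()
    stack = [start]
    while stack:
        for callee in graph[stack.pop()]:
            if callee not in seen:
                seen.add(callee)
                stack.append(callee)
    return seen


def knots(graph: dict[str, list[str]]) -> list[set[str]]:
    """Groups of two or more services that are all mutually reachable."""
    reach = {s: reachable(graph, s) for s in graph}
    groups: list[set[str]] = []
    for s in graph:
        group = {s} | {t for t in reach[s] if s in reach[t]}
        if len(group) > 1 and group not in groups:
            groups.append(group)
    return groups


print([sorted(g) for g in knots(KNOT_GRAPH)])  # [['inventory', 'orders', 'pricing']]
```

Here `catalog` and `email` stay outside the knot because calls only flow one way to or from them.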

To summarise,

Anti-patterns show designers what to avoid in real-world implementations and how to refactor toward better solutions. In software development, design patterns can help identify issues, but they do not provide complete answers. Programmers must still design and build the software, sometimes breaking the rules to meet user expectations.