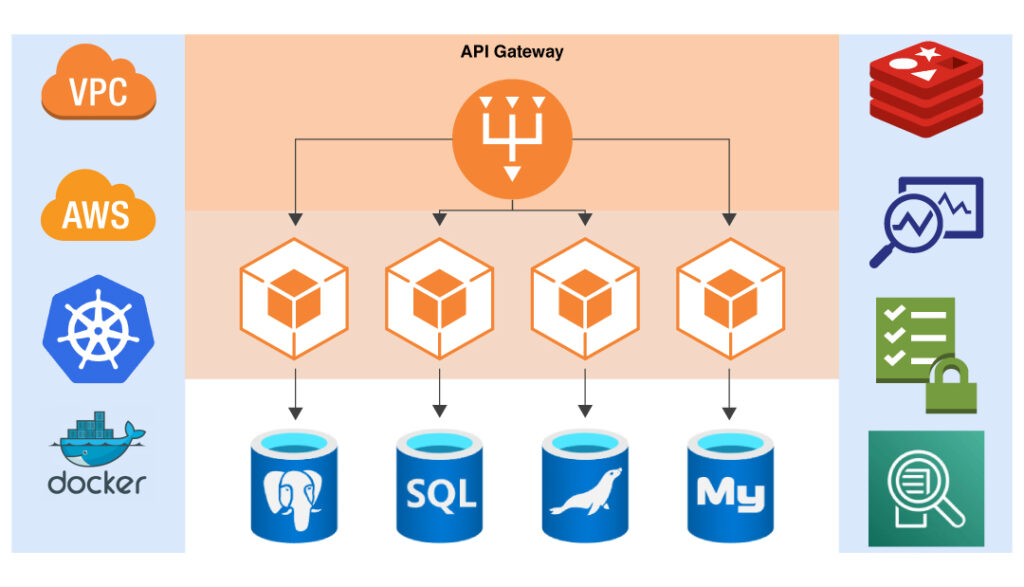

With many businesses migrating to the cloud, attacks on and exploits of cloud infrastructure have increased. A more robust cloud infrastructure has become vital for smooth business activities. Cloud Security Frameworks are sets of best practices that help you streamline your security. This also optimizes your expenditure and helps you run a smooth business.

What is a Cloud Security Framework?

A Cloud Security Framework is a set of documents that outlines the tools, configurations, best practices, and hazard-mitigation measures needed to secure a cloud environment. It is more comprehensive than the related term cloud compliance, which covers only regulatory policies.

The necessity of Cloud Security

Cloud security is standard practice today. However, it is essential to go beyond the average standards to ensure better performance. To get the best security, each company needs its own design, one that covers every aspect relevant to its business. This achieves two goals: addressing the vulnerabilities specific to a business type and reducing costs. Many businesses overlook the latter, resulting in unexpected expenditure.

How to Design a Cloud Security Framework?

- The first step is to identify the common security standards for your industry and design a minimum standard framework around them. Standards differ between industries because every industry faces different kinds of threats. For example, a stock exchange faces front-running attacks, whereas native blockchains face “51% attacks”.

- The next step is to address the compliance regimes that local governments or industry associations mandate. In the US, for example, the NIST Cybersecurity Framework is widely used. Its core is built around five functions (version 2.0, released in 2024, adds a sixth, Govern). They are:

  - Identify organizational requirements and risks

  - Protect critical infrastructure and services

  - Detect security-related events

  - Respond through countermeasures

  - Recover system capabilities

- Next, extend those standard frameworks to cover the threats that your business is specifically exposed to. For example, businesses running large-scale business-to-consumer (B2C) customer services need to address DDoS attacks, which deny access to a website by flooding it with traffic from thousands of coordinated bots.

- Make sure that you can manage, upgrade, or change the framework regularly to suit the short-term and long-term goals of your business. This includes building sufficient infrastructure and having experts available at short notice.

- The most critical part is setting user roles. This matters because chaos ensues during an attack. Mock drills are a good way to assign roles and bring everyone up to speed. Many organizations also host hackathons to understand unforeseen attacks and prepare for them in advance.

- Another often overlooked aspect is the threat from insiders. This threat can be intentional or the result of an omission. Identifying the weak points in your talent pool is critical; otherwise, you will hamper your own efforts.

- The next step is to identify the software, tools, web applications, and other comprehensive solutions that help you prevent an attack or recover from one. For example, Cloudflare helps many content management businesses mitigate DDoS attacks. Similarly, Cisco Cloudlock offers an enterprise-focused CASB solution that covers data protection, threat protection, identity security, and vulnerability management.

- The next step is to document the security threats that occur most frequently and take steps to minimize them. Risk assessment and remediation must be coordinated to keep processes running smoothly.

- Additionally, a response plan is essential in case of a security breach. Data recovery and backups help restore business activities in minimal time. Lost data can cause permanent damage to both business capabilities and reputation.

- Raising awareness is also crucial. Around 58% of cyber vulnerabilities in 2021 arose from human error. IBM reports that a security breach costs more than $4 million on average.

- Finally, another human-related aspect is Zero-Trust Security. It requires authenticating everyone who has system access, insiders and outsiders alike. That access, and the individuals holding it, must then be validated continuously. No one should have access to a system beyond their authority or their mandated access period.
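To make the points about user roles and Zero-Trust access more concrete, here is a minimal Python sketch of a deny-by-default access check. It is only an illustration under stated assumptions: the role names, the `ROLE_PERMISSIONS` table, the `AccessGrant` type, and the `is_allowed` function are all hypothetical and do not come from any particular product or standard.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical role-to-permission table. A real deployment would load this
# from an identity provider or a policy store rather than hard-coding it.
ROLE_PERMISSIONS = {
    "incident_responder": {"read_logs", "isolate_host"},
    "developer": {"read_logs"},
}


@dataclass(frozen=True)
class AccessGrant:
    """A time-boxed grant tying one user to one role (assumed structure)."""
    user: str
    role: str
    valid_from: datetime
    valid_until: datetime


def is_allowed(grant: AccessGrant, action: str, now: datetime,
               authenticated: bool) -> bool:
    """Deny by default; allow only if every check passes for this request."""
    if not authenticated:
        # Identity is re-verified on every request, never assumed.
        return False
    if not (grant.valid_from <= now < grant.valid_until):
        # Outside the mandated access period.
        return False
    # Unknown roles map to an empty permission set, so they are denied.
    return action in ROLE_PERMISSIONS.get(grant.role, set())


if __name__ == "__main__":
    grant = AccessGrant(
        user="alice",
        role="developer",
        valid_from=datetime(2024, 1, 1, tzinfo=timezone.utc),
        valid_until=datetime(2024, 2, 1, tzinfo=timezone.utc),
    )
    now = datetime(2024, 1, 15, tzinfo=timezone.utc)
    print(is_allowed(grant, "read_logs", now, authenticated=True))
    print(is_allowed(grant, "isolate_host", now, authenticated=True))
```

The check runs on every request instead of once at login, which is the core idea behind continuous validation. Keeping the role table separate from the check also makes it easier to rehearse role assignments in a mock drill.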

A Brief Note on Implementation

A strategy is only as good as its implementation. Poor implementation can undermine even the best security framework. Implementation is easier to sustain when it is practiced on a regular basis, even when no immediate need arises.
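One way to make regular practice concrete is a small risk register that records each documented threat and flags the ones that have not been reviewed recently. The Python sketch below is an assumption-laden illustration: the `Risk` type, the 1 to 5 scoring scales, the likelihood-times-impact score, and the 90-day review interval are all hypothetical choices, not requirements of any framework.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Risk:
    """One documented threat in a hypothetical risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (frequent)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    last_reviewed: date

    @property
    def score(self) -> int:
        # Simple likelihood x impact score; other scoring schemes exist.
        return self.likelihood * self.impact


def overdue(risks, today, max_age_days=90):
    """Return risks not reviewed within max_age_days, highest score first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [r for r in risks if r.last_reviewed < cutoff]
    return sorted(stale, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("DDoS attack", 4, 4, date(2024, 1, 10)),
        Risk("Insider misuse", 2, 5, date(2024, 5, 20)),
        Risk("Lost backup", 3, 5, date(2023, 12, 1)),
    ]
    for risk in overdue(register, today=date(2024, 6, 1)):
        print(risk.name, risk.score)
```

Running a check like this on a schedule turns "review regularly" into a list of specific items that someone has to act on.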

Conclusion

Cloud Security Frameworks help you deal with present vulnerabilities and prepare for the future. Each one should be tailored to the business it protects. These practices help reduce costs and allocate resources where they are most needed. Finally, constant upgrades and employee awareness help these frameworks achieve the best results.
